When Psychosis Meets the Algorithm

A Mississippi arson reveals the dangerous collision between mental illness and online radicalization

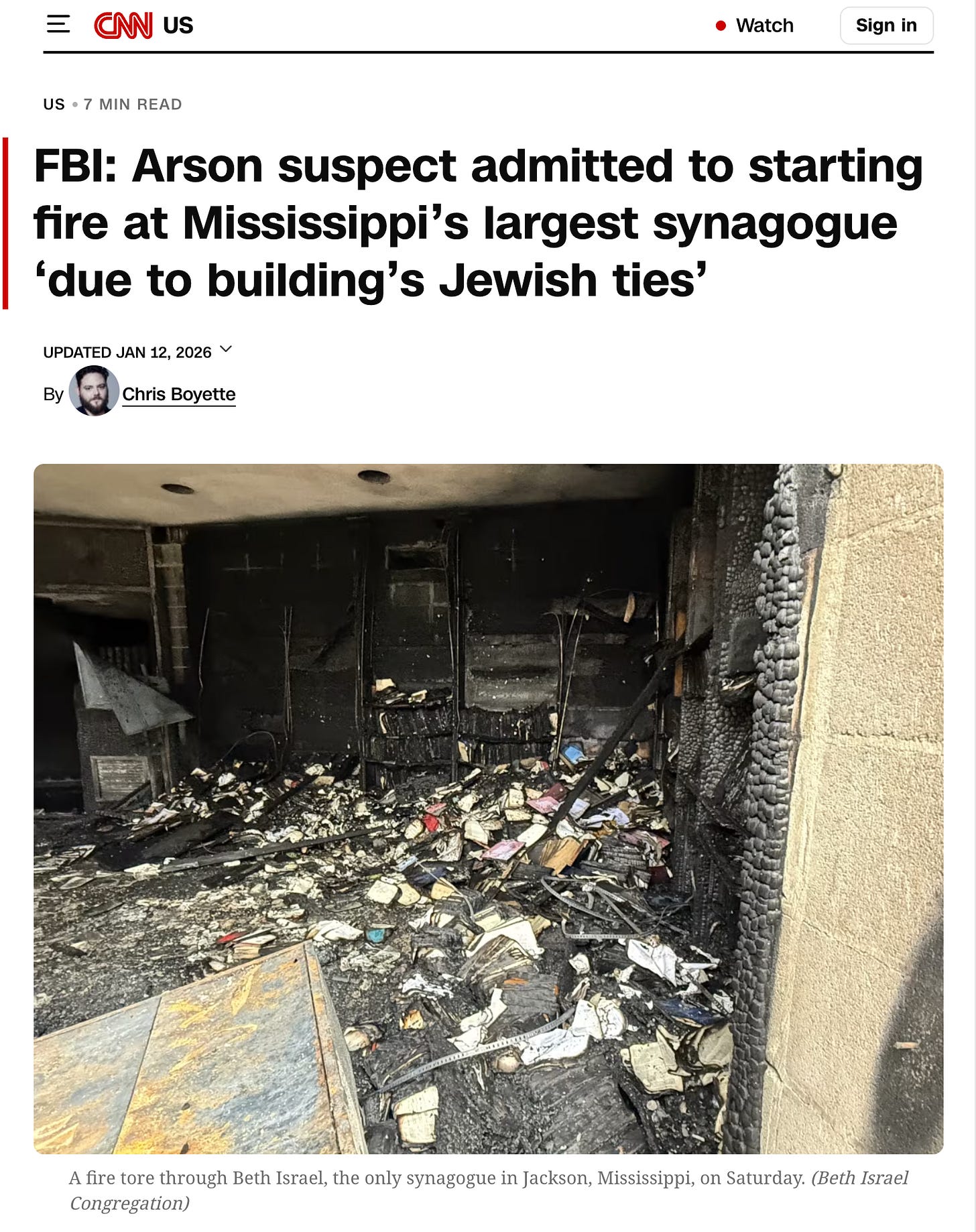

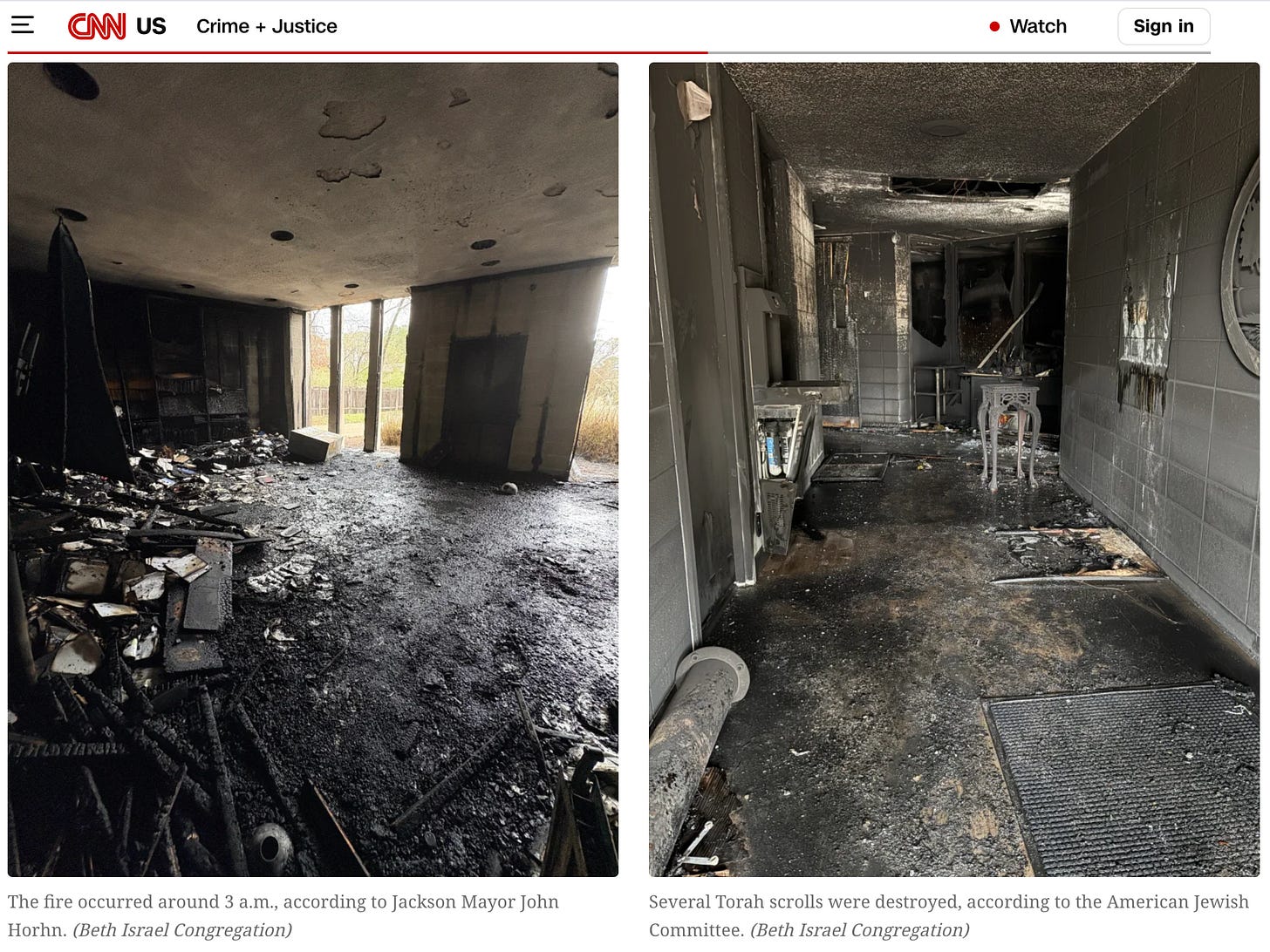

Two weeks ago, a nineteen-year-old named Stephen Spencer Pittman allegedly set fire to Jackson, Mississippi’s Beth Israel Congregation, the city’s only synagogue. Hours earlier, he’d posted antisemitic content to social media.

NPR reports:

Pittman’s father contacted the FBI hours after the fire and said his son had admitted to starting it, the complaint alleges. It states that Life360 map data from Pittman’s cell phone and text messages to his father further corroborated his confession.

According to the FBI, Pittman traveled from his home early Saturday and stopped at a gas station, before heading to Beth Israel. He then texted his father a picture of the back of the synagogue and a series of messages, which read, “There’s a furnace in the back,” “BTW my plate is off,” “Hoodie is on,” and, “they have the best cameras.”

Pittman's father pleaded for his son to return home. Pittman replied that he was "due for a homerun," according to the affidavit. The criminal complaint states that Pittman went on to text his father that he had done his "research." The FBI says Pittman's father noticed burns on his son's ankles, hands, and face and confronted him:

The government’s complaint alleges that when he confessed to his father, with obvious burn injuries visible on his hands and body, he laughed and said he “finally got them.”

Ten days later, standing in federal court with bandaged extremities, Pittman pleaded not guilty. FBI Special Agent Ariel Williams’s testimony revealed something as disturbing as the attack itself: escalating psychiatric deterioration that everyone around him witnessed but no one knew how to stop.

His mother told investigators the family pets were afraid of him. She’d considered locking her bedroom door at night. After he returned on winter break, his behavior changed so dramatically that when his father tried to correct him for saying something offensive, Pittman “bowed up” in his father’s face.

Members of his gym even heard him say he wanted to burn a synagogue.

That laugh of his should haunt us. Not because it confirms Pittman as a monster, but because it suggests a young man whose break from reality coincided with immersion in online antisemitism, creating a perfect storm that existing frameworks for hate crimes and terrorism fail to capture.

Disclaimer: This analysis is based on publicly available information including news reports, legal filings, and social media posts. The author is not a mental health professional, attorney, or clinician, and cannot diagnose individuals nor provide legal counsel. Clinical assessments require direct examination by qualified psychiatrists or psychologists; legal determinations require licensed attorneys and judicial review.

The Clinical Picture

Pittman’s trajectory tells an unusual story. I was able to locate quite a few mentions on social media praising his sports prowess amid photographs of a typical all-American youth. He was an honor roll student at St. Joseph Catholic School, a National College Athletic All-Academic Team member, and spent a few semesters at Coahoma Community College before the attack. High achievers don’t typically abandon their tracks without reason.

Mississippi Today reported that, until recently, Pittman had “mainly used his numerous social media accounts to post about baseball, Christianity and his exercise routines.” My review of his X (Twitter) account confirmed this. Notably, he showed little interest in engaging with anyone online—no back-and-forth with peers, no community building, just broadcast. His social media was a limited monologue.

Friends said he “changed a lot” in recent years. He began bragging about money and promoting questionable health trends he called a “Christian diet.” There were Snapchat videos of him cracking raw eggs into his mouth. Videos of him frantically pumping iron at the gym. Discussions of “testosterone optimization.” Links to online subcultures where “maxxing” masculinity, finances, and fitness bleed into nationalist religious fervor. His Instagram bio read “Entrepreneur” and “Lawyer of God.”

On December 5th, thirty-six days before the attack, Pittman purchased the domain for “One Purpose,” a website offering “Scripture-backed fitness, brotherhood accountability [and] life-expectancy maxxing” for ninety-nine dollars monthly. The site featured God’s ineffable name in Hebrew, references to Jewish fast days, and the seven biblical species of Israel.

Then he came home from Coahoma Community College in northwest Mississippi for winter break. His parents saw their son transform into someone they feared. Their household pets, being animals that are sensitive to behavioral cues often missed by humans, became afraid. The mother who’d raised him for nineteen years considered locking her bedroom door. He started day trading, and physically confronted his father. Others heard him utter racist threats in their presence.

The cognitive disintegration was visible to everyone in real time, but was stopped by no one. At nineteen, Pittman sits at peak age for first-episode psychosis in males. Schizophrenia incidence shows a steep increase culminating at age 15 to 25 years in males. Up to 75 percent of patients with schizophrenia experience a prodromal phase: subtle changes in perception, thinking, and functioning extending from weeks to years before full symptoms emerge.

The checklist reads like Pittman’s life: Academic dysfunction. Personality changes. Bizarre social behavior. Inappropriate affect. Grandiose thinking. Unusual preoccupations. Paranoid ideation. Behavioral disorganization. Changes so severe that family members become afraid.

When parents are considering locking bedroom doors, that isn’t radicalization. It’s a psychiatric emergency.

“Synagogue of Satan”

Pittman’s phrase sits at the intersection of white nationalist propaganda and religious delusion. Religious delusions occur in between one-fifth and two-thirds of patients with delusions, and prevalence rates vary dramatically by country—from 63% in Lithuania to 24% in England to 7% in Japan. In societies where religion plays an important role in everyday life, people with psychiatric disorders tend to show religious delusions more often than in non-religious societies. The content of delusions is determined by cultural context. In Christian-majority societies, common themes include apocalyptic imagery, supernatural persecution, and religious outgroups as existential threats.

Pittman’s inappropriate affect, laughing while confessing to a violent felony, is textbook psychotic disorder. So is the operational chaos: incriminating content posted hours before the attack, texting his father from the scene, his burned cell phone left behind, and severe self-injury to his face, hands, and ankles. This isn’t a picture of methodical ideological terrorism. It’s someone whose relationship with reality has catastrophically failed.

Persecutory delusions correlate strongly with violence risk, particularly when combined with anger. A re-analysis of the MacArthur Violence Risk Assessment Study found that the pathway from threat delusions of being spied upon, persecutory delusions, and delusions of conspiracy to serious violence was mediated by anger arising from the delusional content. The closeness in time, or temporal proximity, between the delusion and the act is an important factor. When someone acts violently during or immediately after experiencing a delusion, there’s stronger evidence the delusion caused the violence than when someone holds delusional beliefs for months but acts for unrelated reasons.

Pittman’s timeline appears compressed: on Saturday, January 10, at 12:52 a.m., he posted an antisemitic video on Instagram.

“A Jew in my backyard, I can’t believe my Jew crow didn’t work,” an animated Disney princess-style character said to a short yellow figure with a long nose. “You’re getting baptized right now.”

Roughly two hours later, he drove a few miles to commit the arson, catching himself on fire in the process. He drove himself to the hospital for treatment, and was arrested later that evening after his father, who had seen his burns and heard his laughing confession, contacted law enforcement. Two days after that, he replied "Jesus Christ is Lord" when the judge read his rights. The delusion wasn’t incidental background noise. It was actively driving events.

The Radicalization Machine

Pittman wasn’t reading Revelation in isolation. Before October 7th, 2023, antisemitic content lived in dark corners. After Hamas attacked, monitoring organizations in the United States and the United Kingdom documented dramatic increases in antisemitic incidents.

The ADL documented 8,873 antisemitic incidents in the United States in 2023, more than double the 3,698 reported in 2022. Similarly, the British Community Security Trust recorded 4,103 incidents in the UK in 2023, up from 1,662 in 2022. It recorded 2,019 antisemitic incidents in the first half of 2024 alone (the highest six-month total ever) before the figure declined to 1,521 in the first half of 2025.

This remains historically elevated: the 2025 figure is still 84% higher than the 823 incidents recorded in the first half of 2022. After October 7th, content that once only lived in dark corners began to flood mainstream feeds—algorithmically amplified, packaged as edgy humor or political commentary, and normalized through sheer repetition. For someone like Pittman who was already showing signs of cognitive deterioration, this was the water he swam in.
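The comparisons above are simple ratios and percentage changes, and they can be checked directly. The sketch below uses only the incident counts quoted in this section; the helper name `percent_change` is illustrative, and nothing here comes from the ADL's or the Community Security Trust's own methodology.

```python
def percent_change(old: float, new: float) -> float:
    """Percentage change from an earlier count to a later one."""
    return (new - old) / old * 100.0


# Incident counts as quoted in the text above.
adl_2022, adl_2023 = 3698, 8873          # United States, full year
cst_2022, cst_2023 = 1662, 4103          # United Kingdom, full year
cst_h1_2022, cst_h1_2024, cst_h1_2025 = 823, 2019, 1521  # UK, January-June

adl_ratio = adl_2023 / adl_2022          # how many times larger 2023 was
cst_ratio = cst_2023 / cst_2022
h1_2025_vs_2022 = percent_change(cst_h1_2022, cst_h1_2025)
h1_2025_vs_2024 = percent_change(cst_h1_2024, cst_h1_2025)

print(f"ADL 2023 vs 2022: {adl_ratio:.2f}x")
print(f"CST 2023 vs 2022: {cst_ratio:.2f}x")
print(f"CST H1 2025 vs H1 2022: {h1_2025_vs_2022:+.1f}%")
print(f"CST H1 2025 vs H1 2024: {h1_2025_vs_2024:+.1f}%")
```

On these figures, both national totals rose by a factor of roughly 2.4, and the first half of 2025 sits about 85% above the first half of 2022 even after falling by about a quarter from the 2024 peak.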

Hours before the attack, Pittman shared content from “jew_inbackyard_daily,” an Instagram account posting antisemitic material: caricatured figures with exaggerated features stealing bags of money, getting pushed into pools, “baptized.” The account name itself signals industrialized hate production.

According to the PIRUS database, cases in which the internet played the primary role in radicalizing people under the age of 30 have risen 413% since 2020 compared to the previous decade. Bad actors on platforms like 4chan, 8chan, Parler, Reddit, Gab, TikTok, Truth Social, X, and Instagram employ memes, dark humor, and pseudo-intellectual discourse to normalize racist and antisemitic beliefs, particularly targeting young men.

The Christchurch killer explicitly encouraged followers to “make memes” about his attack, understanding that internet culture perpetuates ideology more effectively than traditional propaganda—a strategy sometimes called “memetic warfare.”

What happens when someone immersed in this ecosystem begins experiencing prodromal psychotic symptoms? Emerging paranoia, difficulty distinguishing reality from digital content, religious preoccupations. These don’t develop in a cultural vacuum. They incorporate available material.

The Diagnostic Trap

Was Pittman experiencing genuine psychotic delusions, or what researchers call “extreme overvalued beliefs”: rigidly held convictions that motivate terrorism but aren’t delusional? The distinction matters legally and clinically, but the boundaries blur. Extreme overvalued beliefs are shared by subculture members, maintain internal logic, and develop through social learning rather than psychotic processes.

The difference isn't whether the belief is abhorrent; it's whether it's culturally transmitted or idiosyncratically generated. A neo-Nazi who believes in an international Jewish conspiracy holds an extreme overvalued belief: false and hateful, but shared by a subculture and spread through social learning. Someone who believes his specific neighbor is a Jewish agent implanting thoughts through the television holds a delusion. Conspiracy theories are taught. Delusions emerge from a breaking mind.

But what about someone who builds a fitness website steeped in Hebrew religious content, then burns a synagogue and laughs about “finally getting them”?

Such an incongruous trajectory suggests neither pure ideology nor pure psychosis, but a pathological intersection of both: emergent psychosis shaped by online radicalization with psychotic symptoms providing the disinhibition necessary to act on violence suggested by extremist ideology. Psychosis breaks reality. Ideology fills the void. Algorithms reinforce it constantly.

Arson and the Mind

Swedish registry data reveals dramatically elevated odds ratios for arson convictions among individuals with schizophrenia: 22.6 for males, 38.7 for females. These risk estimates are higher than those reported for other violent crimes and place arson in the same category as homicide as crimes most strongly associated with psychotic disorders.

The symbolic dimension matters. Fire destroys, purifies, attracts attention. In religious delusions, it represents divine judgment. For someone experiencing psychotic-level religious persecution beliefs, burning a “synagogue of Satan” may feel like spiritual warfare.

This is the same synagogue that was bombed by the Ku Klux Klan in 1967 during the civil rights era. The attack allegedly struck the same wing of the octagonal building that burned in that earlier attack.

What We’re Missing

Could this have been prevented? At what point does “concerning behavior” become “imminent risk requiring intervention”? We have no system for this.

Research shows social media activity captures objective markers of psychotic relapse. One study achieved 71% specificity predicting psychiatric hospitalizations from Facebook posts, identifying linguistic shifts in the month before relapse: increased swearing, anger, and death-related language; more first-person pronouns; fewer references to work, friends, and health.
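Specificity only describes how often non-events are correctly left unflagged, so the 71% figure says nothing by itself about how many relapses were caught. A minimal sketch of the definition follows; every count in it is hypothetical, chosen only to reproduce a 71% specificity, and none is taken from the study.

```python
def specificity(true_negatives: int, false_positives: int) -> float:
    """Share of actual non-events that the model correctly left unflagged."""
    return true_negatives / (true_negatives + false_positives)


def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Share of actual events (here, hospitalizations) that the model caught."""
    return true_positives / (true_positives + false_negatives)


# Hypothetical counts, NOT from the study: 100 periods with no hospitalization,
# of which the model wrongly flagged 29.
tn, fp = 71, 29
spec = specificity(tn, fp)

# Scaled up to a hypothetical 1,000 stable periods, the same specificity
# implies this many false alarms.
false_alarms_per_1000 = round((1 - spec) * 1000)

print(f"specificity: {spec:.2f}")
print(f"false alarms per 1,000 stable periods: {false_alarms_per_1000}")
```

The false-alarm count is why the ethical and legal questions matter: at this specificity, a screening tool applied broadly would flag many people who were never going to relapse.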

Could similar patterns identify individuals in prodromal phases, before a first psychotic break? Research demonstrates machine learning can predict conversion to psychosis with 93% accuracy by analyzing speech for two markers: low semantic density (vagueness) and increased talk about voices and sounds.

The challenge isn't technical, per se. It's ethical and legal. With that said, who might already be doing it?

The State Actor Question

A colleague with decades in information operations raised a hypothesis: what if hostile state actors are identifying vulnerable individuals and accelerating their radicalization?

Russian intelligence has demonstrated sophisticated social media manipulation. But the true infrastructure is GRU Military Unit 54777, Russia’s formal psychological operations command.

Established in 1994 as successor to the Soviet Special Propaganda Directorate, Unit 54777 operates in peace and wartime with documented involvement in the Crimea annexation, Syria, Ukraine, European elections, and COVID-19 disinformation. Unlike Soviet-era units activating only during military operations, 54777 conducts continuous psychological warfare. Its operations integrate cyberspace with traditional PSYOPS: “information confrontation,” a fusion of cyber-technical and cyber-psychological attacks with the goal of eroding the adversary’s will.

They operate through social media and front organizations (InfoRos, Institute of the Russian Diaspora), with subordinate PSYOPS units in every Russian military district. The “Aquarium Leaks” (declassified GRU documents) reveal a consistent strategy: identify contentious social issues, flood platforms with divisive content through bot networks and troll armies, and amplify societal tensions. Not to win debates, but to paralyze societies through internal conflict.

Industrial-scale psychological operations require precision targeting. Enter surveillance capitalism. Research has established that comprehensive personality profiles can be inferred from as few as 300 Facebook “likes” with spouse-level accuracy. Cambridge Analytica exposed this at scale: combining psychological tests from 270,000 users with machine-learning models, the firm built personality profiles for over one hundred million U.S. voters, then used algorithmic microtargeting to tailor messages to individual psychological vulnerabilities.

Digital footprints predict political orientation, sexual orientation, ethnicity, intelligence, and, crucially, psychological states: depression, anxiety, emotional vulnerability. Facebook offered advertisers the ability to target users “in moments of psychological vulnerability,” identifying when young people feel insecure and stressed.

This is psy-ops at scale. Personality-tailored messaging increases persuasiveness by approximately 40% compared to untargeted content. Studies show even warnings about microtargeting fail to reduce message persuasiveness for personality-matched content. The manipulated cannot perceive the manipulation.

Social media platforms collect massive behavioral data: linguistic patterns, posting frequency, content shifts, engagement metrics, temporal behaviors. The capability architecture exists to identify individuals showing early signs of psychiatric vulnerability, apply personality profiling to understand specific psychological architecture, and deliver personality-optimized radicalization content through algorithmic amplification.

This remains a theoretical vulnerability, not documented practice. While Intelligence Community assessments on foreign malign influence focus on election interference, disinformation campaigns, and diaspora targeting, they haven’t discussed the exploitation of psychiatric vulnerabilities (or cognitive warfare more broadly).

However, the operational capability unquestionably exists, and some of the recent clusters of “random” or “unexplained” attacks by mentally ill individuals in the United States and elsewhere could fit this hypothesis within the framework of non-linear warfare.

Technical components (psychological profiling, microtargeting, radicalization content delivery, vulnerability identification) have all been demonstrated in commercial and intelligence contexts. The intersection of online radicalization and mental illness sits between terrorism research and psychiatry and remains understudied, creating blind spots where such operations are able to develop undetected and flourish.

Whether through deliberate state actor targeting or emergent properties of algorithmic amplification, vulnerable individuals experiencing early psychotic symptoms are being exposed to extreme content precisely when least equipped to evaluate it critically.

The Forensic Dilemma

Pittman faces federal arson charges and state charges with hate crime enhancements. At his January 20th bond hearing, Magistrate Judge LaKeysha Greer Isaac denied bond: “No conditions would ensure community safety.” Trial is set for late February. He faces five to twenty years on the federal charges and five to thirty on the state charges, or up to sixty with hate crime enhancements.

Given the suspected psychotic symptoms, a substantial basis exists for a competency evaluation. When the judge read him his rights during his initial appearance via video from his hospital bed, Pittman responded, “Jesus Christ is Lord,” possibly indicating tangential thinking or an inability to focus on legal proceedings. He appeared with bandaged hands and ankles, carrying a Bible, and said little to his attorney through two hours of proceedings.

The insanity defense proves more complex. Federal law requires proving the defendant “was unable to appreciate the nature and quality or wrongfulness of his acts.” Premeditation and scant signs of operational security, such as removing license plates and wearing a hoodie, suggest preserved appreciation of consequences. But the text messages, self-inflicted burns, laughing confession, and social media posts before and after the arson indicate profoundly impaired judgment.

Public defender Mike Scott argued that Pittman had suffered third-degree burns, that incarceration could risk his health, and that he posed no danger to the community. Prosecutor Matthew Wade Allen countered that Pittman posed “serious risk he will obstruct justice or threaten, injure or intimidate witness or juror.”

Judge Isaac agreed.

Treatment and Prevention

Early treatment for first-episode psychosis dramatically improves outcomes. But for someone like Pittman, treatment must also address the ideological content of his beliefs—and traditional psychosis programs have no framework for this.

Standard treatment for delusions involves medication and therapy to help patients reality-test their beliefs. Deradicalization programs help people exit extremist ideologies. But what happens when the two overlap? How do clinicians distinguish a psychotic delusion requiring psychiatric treatment from a sincerely held extremist belief requiring deradicalization? Where does illness end and ideology begin? This intersection remains uncharted clinical territory.

The integrated approach Pittman needs essentially doesn’t exist.

Unfortunately, his case isn’t isolated. As a recent example, Australia’s security service, ASIO, reported in its 2025 Annual Threat Assessment that it had found a twelve-year-old self-professed neo-Nazi discussing on social media how to livestream a school shooting before moving on to religious targets. ASIO’s intelligence enabled U.S. authorities to intervene.

The director-general noted a disturbing pattern: many radicalized minors “did not have a clear or coherent ideology beyond an attraction to violence itself.”

What needs to happen?

Intervention pathways for families witnessing psychiatric deterioration. NAMI’s crisis intervention resources provide guidance, but we need systematic protocols that empower families to act before crisis becomes catastrophe.

More interdisciplinary research at the intersection of mental health and radicalization. Current studies remain siloed. The EU Radicalisation Awareness Network’s practitioner handbook presents a start, but NIJ-funded research confirms the need for integrated approaches.

Early intervention services for psychosis must develop capacity to assess ideological radicalization. NIMH’s EPINET initiative delivers a framework, but clinicians need training to recognize when delusional content intersects with extremist recruitment.

Platforms must acknowledge their role in algorithmic amplification of extreme content. The Center for Countering Digital Hate’s STAR Framework outlines principles for Safety by Design, Transparency, Accountability, and Responsibility. The ADL’s research on algorithmic amplification documents the problem; proposed legislation offers a regulatory path forward.

Frameworks for identifying vulnerable individuals without creating new surveillance states. Emerging research on AI that can infer mental states from behavioral patterns (Affective Computing) highlights core tensions between ethical care and patient privacy and autonomy.

Funded treatment capacity instead of prisons as de facto psychiatric hospitals. The Treatment Advocacy Center documents how 383,000 individuals with severe mental illness are incarcerated versus 38,000 in state psychiatric hospitals. NAMI’s policy position and the Prison Policy Initiative’s research outline evidence-based alternatives to this.

The Uncomfortable Conclusion

Antisemitism isn’t a symptom of mental illness. The vast majority of antisemitic violence is perpetrated by individuals without mental illness, motivated by ideology, hatred, political extremism. Attributing hate to mental illness risks excusing ideologically-motivated violence while reinforcing unfair stigma.

But some percentage of cases (we don’t know how many, because I’m not sure anyone is looking systematically yet) represent this dangerous intersection: vulnerable individuals experiencing psychotic symptoms, immersed in online radicalization, and lacking insight to seek help or support systems to intervene.

These cases don’t fit existing categories: Not pure mental illness. Not pure terrorism. They are hybrid threats at the intersection of psychiatry, counterterrorism, technology policy, and public health. Everyone around Pittman saw what was happening, but no one could stop it.

His laugh wasn’t evil triumphant. Those behavioral changes weren’t simple signs of ideological radicalization. That incongruous website wasn’t some act of strategic deception. His mother’s fear wasn’t an overreaction. These acts were the signatures of a mind fragmenting in real time, with unregulated algorithms and hateful ideology filling the cracks.

The question isn't simply whether hostile state actors are deliberately exploiting psychiatric vulnerability for radicalization; capability and motive exist.

The more crucial question is whether we're willing to acknowledge that the digital environment Big Tech has created has effectively automated the same process: algorithmic amplification of extreme content, precision targeting of vulnerable individuals, and radicalization at scale. Either way, we’re unprepared.

Pittman’s trial begins February 23rd. Perhaps it will answer legal questions about guilt and responsibility. But it won’t answer what matters most: How many others are fragmenting right now? How many families are watching the same deterioration with no idea where to turn? And what are we doing about it?

If you or someone you know is struggling with mental health issues, the National Alliance on Mental Illness (NAMI) helpline is available at 1-800-950-NAMI (6264).

If you have concerns about someone’s radicalization, the FBI accepts tips at tips.fbi.gov.
